Site Speed is important for Google

- Apr 12, 2010, modified: Jan 14, 2025

Google is placing more importance on the speed of a website. Developers who outsource their work or rely on slow-loading open source systems may find that their sites perform worse and get crawled by Google less often. Sites with many advertisements or other add-ons may also be affected. The software available to web developers is improving all the time.

The notion that a web builder system can keep pace with an experienced web developer using advanced software is absurd.

Check some SEO info on site speed, which discusses plugins that developers often do not use correctly. Some developers take shortcuts and use other people's code rather than acquiring the skills themselves.

Have you ever noticed how some sites load much faster than others with seemingly the same type of page?

Many of the tools developers use to "extend" their skills actually come with a speed overhead.

If the developer does not know which parts are needed on which page, they will often load everything, including unnecessary code, on every page.

Some of these scripts are written to perform many functions, none of which may be used on that particular page.

A poorly created web page is like running up a hill. It's slower and makes you feel heavier.

Matt Cutts of Google mentioned on his blog in 2010 that Google would be using site speed in search rankings.

Source: Google presentation "Demystifying Speed Tooling"

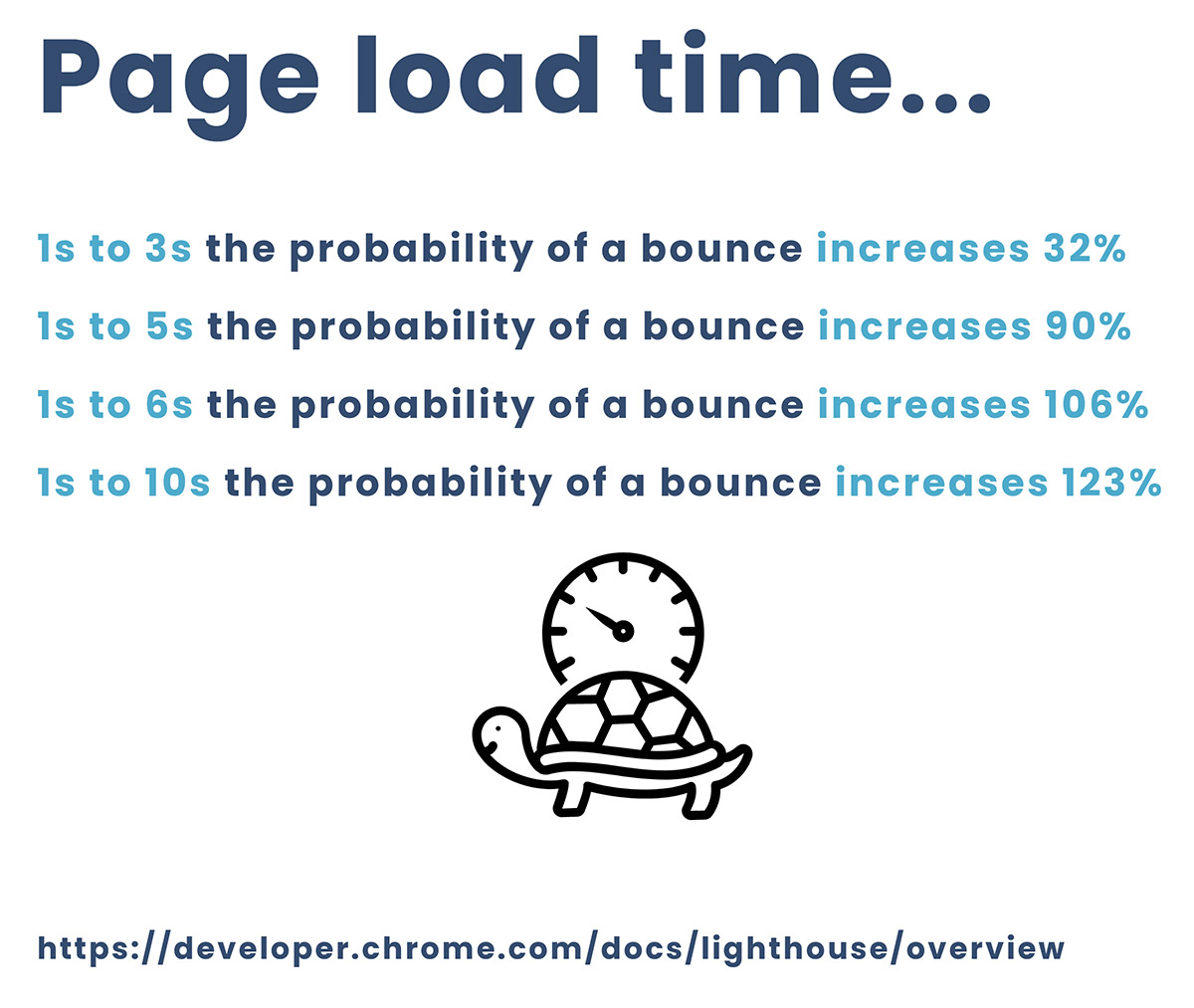

Google Analytics describes bounce rate as follows: "Bounce Rate is the percentage of single-page visits (i.e. visits in which the person left your site from the entrance page)." The focus has now shifted to engagement: a visit in which someone lands on a page and does not engage.
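The quoted definition can be written as a small calculation. The following is only a sketch: the function name `bounce_rate` and the sample visit data are illustrative assumptions, not anything taken from Google Analytics itself.

```python
def bounce_rate(pages_per_visit):
    """Return the percentage of visits that viewed exactly one page."""
    if not pages_per_visit:
        return 0.0
    single_page = sum(1 for pages in pages_per_visit if pages == 1)
    return 100.0 * single_page / len(pages_per_visit)


# Hypothetical sample: five visits, two of which left from the entrance page.
visits = [1, 3, 1, 5, 2]
print(bounce_rate(visits))  # 40.0
```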

Servers and Location

Locating your data centre servers as close as possible to your clients makes sense. The term latency refers to "the delay before a transfer of data begins following an instruction for its transfer". This is magnified when it comes to loading a webpage because everything you do on the internet is done in packets. Every webpage that you receive arrives as a series of packets, and each exchange pays the latency cost again.

The closer the server is to your customer, the quicker the page will load, all other factors being equal.
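A rough way to see why distance matters is to multiply the round-trip time by the number of round trips a page needs. The sketch below is a deliberately simplified model: the function name and the figures (20 ms, 250 ms, 20 round trips) are assumptions chosen for illustration, and it ignores bandwidth, server processing time and the fact that browsers fetch several resources in parallel.

```python
def latency_cost_ms(round_trip_ms, round_trips):
    """Total time spent waiting on the network, ignoring bandwidth and server time."""
    return round_trip_ms * round_trips


# Hypothetical figures: the same page needing 20 sequential round trips.
nearby = latency_cost_ms(round_trip_ms=20, round_trips=20)
distant = latency_cost_ms(round_trip_ms=250, round_trips=20)
print(nearby, distant)  # 400 5000
```

Under these assumed numbers, the distant server adds several seconds of waiting before any difference in code quality is considered.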

Our 4 server locations

At GoldCoastLogin.com.au we have 4 geographically dispersed server locations. All servers are configured to serve static pages as static pages and dynamic pages as dynamic pages. Some systems serve all pages out of a database even if they do not change. WordPress and other database-driven systems can have this issue.

Monitoring Database Connections

Our systems are built around minimising the use of database connections. We configure connection pools for busy pages. MySQL can be monitored for the number of connections in use at any one time.

SHOW PROCESSLIST

This is a very useful command that displays the active connections and the databases they are connected to. It is handy for monitoring resources and detecting spam attacks.
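As a minimal sketch of how that output might be summarised, the example below counts connections per database. It assumes the rows have already been fetched by whatever MySQL client library you use and that they follow the standard SHOW PROCESSLIST column order (Id, User, Host, db, Command, Time, State, Info). The function name and the sample rows are hypothetical.

```python
from collections import Counter


def connections_per_database(processlist_rows):
    """Count connections per database from SHOW PROCESSLIST rows.

    Each row is assumed to follow the column order
    (Id, User, Host, db, Command, Time, State, Info).
    """
    counts = Counter()
    for row in processlist_rows:
        db = row[3] or "(none)"  # db is NULL when no default database is selected
        counts[db] += 1
    return counts


# Hypothetical rows, shaped as a client library might return them.
rows = [
    (11, "web", "10.0.0.5:51000", "shop", "Sleep", 12, "", None),
    (12, "web", "10.0.0.5:51002", "shop", "Query", 0, "executing", "SELECT 1"),
    (13, "admin", "localhost", None, "Query", 0, "init", "SHOW PROCESSLIST"),
]
for db, total in connections_per_database(rows).most_common():
    print(db, total)
```

A sudden jump in the count for one database is the kind of signal that could prompt a closer look at the traffic behind it.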

Case study - Hearing Aids site

www.hearingaidsaustralia.com is a simple site that performs very well in searches. Check it with a search for "Hearing Aid Prices Australia".

It uses minimalistic code, which allows it to load faster and be crawled more easily by Google.

It outperforms many other sites targeting the hearing aid market, largely because of its structure and minimalistic code.

Learn more about the importance of building a site with SEO advantages.

Test your site speed

Google provides a site speed analysis tool that you can use to test the speed of your site. As web developers we highly optimise the code and the servers to give a better user experience, because nobody likes a slow website.

Pingdom has a useful tool that quickly shows the problems with a website and offers solutions. Two figures to watch:

Page size: this is a figure in bytes or megabytes and shows the size of the page. The lower the better.

Requests: there is latency and overhead in every request for a resource. Pages with large numbers here are often loading unnecessary (unused) scripts.

If your website has a high page size and makes many requests, think about a redesign. Even if the Performance grade is OK, the site is still loading unnecessary files.
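For a quick first check before running a full speed tool, the two figures above can be approximated from a page's HTML alone. This is a rough sketch using Python's standard `html.parser` module. The class and function names are hypothetical, the sample markup is made up, and the result only covers the HTML document's own size and the scripts, stylesheets and images it references directly, so it will understate what a real speed test reports.

```python
from html.parser import HTMLParser


class ResourceCounter(HTMLParser):
    """Count tags that normally trigger an extra HTTP request."""

    def __init__(self):
        super().__init__()
        self.requests = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("script", "img") and attrs.get("src"):
            self.requests += 1
        elif tag == "link" and attrs.get("rel") == "stylesheet" and attrs.get("href"):
            self.requests += 1


def summarise_page(html):
    """Return (size of the HTML in bytes, number of referenced resources)."""
    counter = ResourceCounter()
    counter.feed(html)
    return len(html.encode("utf-8")), counter.requests


# Hypothetical page: one stylesheet, one external script, one inline script, one image.
sample_html = (
    '<html><head><link rel="stylesheet" href="a.css">'
    '<script src="a.js"></script><script>var x = 1;</script></head>'
    '<body><img src="a.png"></body></html>'
)
size_bytes, request_count = summarise_page(sample_html)
print(size_bytes, request_count)
```

The inline script is not counted because it does not cause a separate request.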

Bots are scraping your content and slowing the Internet

Estimates of the share of Internet traffic now generated by bots range from 42% to 50%. That could mean that up to half of your server load is going to bots.
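To get a feel for the share on your own server, you could classify the user agent strings in your access log. The sketch below is an assumption-heavy heuristic: the marker list is not exhaustive, the function names are hypothetical, and bots that disguise themselves as browsers will not be caught.

```python
BOT_MARKERS = ("bot", "crawler", "spider")  # assumed markers, not an exhaustive list


def is_bot(user_agent):
    """Guess whether a user agent string belongs to a bot."""
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def bot_share(user_agents):
    """Return the percentage of requests whose user agent looks like a bot."""
    if not user_agents:
        return 0.0
    bots = sum(1 for ua in user_agents if is_bot(ua))
    return 100.0 * bots / len(user_agents)


# Hypothetical sample of user agents pulled from an access log.
agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X)",
]
print(bot_share(agents))  # 50.0
```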
